A new artificial intelligence (AI) model has achieved human-level results on a test designed to measure “general intelligence.”

On December 20, OpenAI’s o3 system scored 85% on the ARC-AGI benchmark, well above the previous best AI score of 55% and on par with the average human score. It also scored well on a very difficult mathematics test.

Creating artificial general intelligence, or AGI, is the stated goal of all the major AI research labs. At first glance, OpenAI appears to have at least taken a significant step toward this goal.

While skepticism remains, many AI researchers and developers feel that something has changed. For many, the possibility of AGI now seems more real, urgent, and closer than anticipated. Are they right?

Generalization and intelligence

To understand what the o3 result means, you need to understand what the ARC-AGI test is. In technical terms, it is a test of an AI system’s “sample efficiency” in adapting to something new: how many examples of a novel situation the system needs to see to figure out how it works.

An AI system like ChatGPT (GPT-4) is not very sample efficient. It was “trained” on millions of examples of human text, producing probabilistic “rules” about which combinations of words are most likely.

The result is quite good at common tasks. It is bad at uncommon tasks, because it has less data (fewer samples) about those tasks.

Until AI systems can learn from small numbers of examples and adapt with greater sample efficiency, they will only be used for very repetitive jobs and those where occasional failure is tolerable.

The ability to accurately solve previously unknown or novel problems from limited samples of data is known as the ability to generalize. It is widely considered an essential, even fundamental, element of intelligence.

Grids and patterns

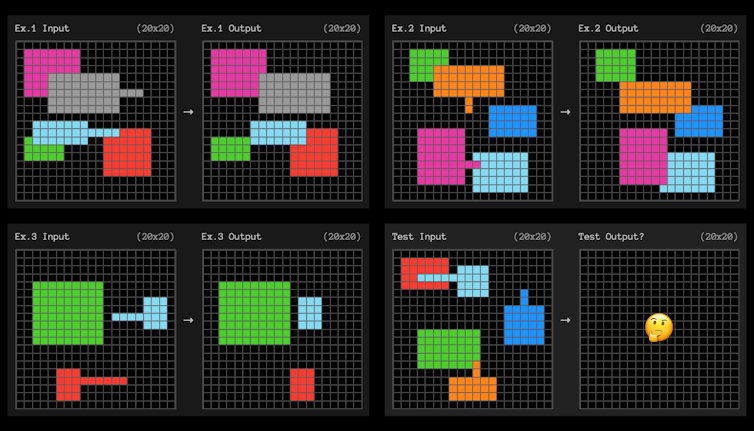

The ARC-AGI benchmark tests for sample-efficient adaptation using small grid-square problems like the one shown above. The AI needs to find the pattern that transforms the grid on the left into the grid on the right.

Each question gives three examples to learn from. The AI system then needs to figure out the rules that “generalize” from the three examples to the fourth.

These are a lot like the IQ tests you might remember from school.
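The three-examples-then-a-fourth setup can be sketched in a few lines of Python. This is only a toy illustration: the grids, the numbers in them, and the candidate rule `mirror_left_right` are invented here and are not taken from the real benchmark.

```python
# Toy ARC-style task. The grids and the rule are invented for illustration;
# they are not taken from the real ARC-AGI benchmark.
Grid = list[list[int]]

def mirror_left_right(grid: Grid) -> Grid:
    """Hypothetical candidate rule: reverse every row of the grid."""
    return [list(reversed(row)) for row in grid]

def fits_all(rule, examples) -> bool:
    """A rule 'fits' if it maps every example input to its example output."""
    return all(rule(inp) == out for inp, out in examples)

# Three worked examples: (input grid, output grid).
examples = [
    ([[1, 0, 0], [0, 0, 0]], [[0, 0, 1], [0, 0, 0]]),
    ([[0, 2, 0], [3, 0, 0]], [[0, 2, 0], [0, 0, 3]]),
    ([[4, 4, 0], [0, 0, 5]], [[0, 4, 4], [5, 0, 0]]),
]

# The fourth grid, for which only the input is given.
test_input = [[0, 0, 6], [7, 0, 0]]

# Only trust the rule on the fourth grid if it explains all three examples.
prediction = mirror_left_right(test_input) if fits_all(mirror_left_right, examples) else None
print(prediction)  # [[6, 0, 0], [0, 0, 7]]
```

The hard part, which this sketch skips entirely, is coming up with the candidate rule in the first place.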

Weak rules and adaptation

We don’t know exactly how OpenAI did this, but the results show that the o3 model is highly adaptable. From just a few examples, it finds rules that can be generalized.

To figure out a pattern, we shouldn’t make any unnecessary assumptions or be more specific than we really have to be. In theory, if you can identify the “weakest” rules that do what you want, you have maximized your ability to adapt to new situations.

What do we mean by the weakest rules? The technical definition is complex, but weak rules are usually those that can be described in simple statements.

In the example above, a plain-English expression of the rule might be something like this: “Any shape with a raised line will move to the end of that line and ‘cover’ any other shapes that overlap with it.”

Looking for chains of thought?

Although we do not yet know how OpenAI achieved this result, it seems unlikely that they deliberately optimized the o3 system to find weak rules. However, to succeed at the ARC-AGI tasks it must be finding them.

We do know that OpenAI started with a general-purpose version of the o3 model (which differs from most other models because it can spend more time “thinking” about difficult questions) and then trained it specifically for the ARC-AGI test.

French AI researcher Francois Chollet, who created the benchmark, believes that o3 searches through different “chains of thought” describing the steps to solve the task. It would then choose the “best” one according to some loosely defined rule, or “heuristic”.

This would be “not dissimilar” to how Google’s AlphaGo system searched through different possible sequences of moves to beat the world Go champion.

You can think of these chains of thought as programs that fit the examples. Of course, if it is like the Go-playing AI, it needs a heuristic, or loose rule, to decide which program is best.

There could be thousands of different, seemingly equally valid programs generated. That heuristic could be “pick the weakest” or “pick the simplest.”

However, if it is like AlphaGo, then they simply had an AI create the heuristic. This was the process for AlphaGo: Google trained a model to rate different sequences of moves as better or worse than others.
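To make the idea of choosing among programs concrete, here is a toy sketch of the hand-written option, “pick the simplest.” It is not how o3 works, which has not been disclosed. The candidate programs, their descriptions, and the use of description length as a crude stand-in for “simplest” or “weakest” are all assumptions made for illustration, and a learned rating model of the AlphaGo kind is not shown.

```python
# Toy sketch: several candidate "programs" fit the same examples, and a
# heuristic picks one. Everything here is hypothetical; it is not o3's method.
from typing import Callable, NamedTuple

Grid = list[list[int]]

class Candidate(NamedTuple):
    description: str             # plain-language statement of the rule
    run: Callable[[Grid], Grid]  # the program itself

def mirror(grid: Grid) -> Grid:
    return [row[::-1] for row in grid]

def mirror_if_two_rows(grid: Grid) -> Grid:
    # Over-specific: it matches the examples only because they all have two rows.
    return mirror(grid) if len(grid) == 2 else grid

def identity(grid: Grid) -> Grid:
    return grid

candidates = [
    Candidate("leave the grid unchanged", identity),
    Candidate("mirror each row, but only when the grid has exactly two rows",
              mirror_if_two_rows),
    Candidate("mirror each row", mirror),
]

examples = [
    ([[1, 0], [0, 0]], [[0, 1], [0, 0]]),
    ([[0, 2], [3, 0]], [[2, 0], [0, 3]]),
]

def fits(candidate: Candidate) -> bool:
    return all(candidate.run(inp) == out for inp, out in examples)

# Heuristic: among the programs that fit, prefer the shortest description.
fitting = [c for c in candidates if fits(c)]
best = min(fitting, key=lambda c: len(c.description))
print(best.description)  # mirror each row
```

Two of the three candidates explain the examples equally well. They only disagree on grids that do not have two rows, which is exactly where the less specific rule adapts better.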

What we still don’t know

The question, then, is whether this is really closer to AGI. If that is how o3 works, the underlying model might not be much better than previous models.

The concepts the model learns from language might be no more suitable for generalization than before. Instead, we may just be seeing a more generalizable “chain of thought” found through the extra steps of training a heuristic specialized for this test. The proof, as always, will be in the pudding.

Almost everything about o3 remains unknown. OpenAI has limited disclosure to a few media presentations and early testing by a handful of researchers, laboratories, and AI safety institutes.

Truly understanding the potential of o3 will require extensive work, including evaluations, an understanding of the distribution of its capabilities, and measurement of how often it fails and how often it succeeds.

When o3 is finally released, we will have a much better idea of whether it is approximately as adaptable as an average human.

If so, it could have a huge, revolutionary economic impact, ushering in a new era of self-improving, accelerated intelligence. We will need new standards for AGI and serious consideration of how it should be regulated.

If not, it would still be an impressive result. However, everyday life will remain much the same.

(Authors: Michael Timothy Bennett, PhD Student, School of Computing, Australian National University, and Eliza Perrier, Research Fellow, Stanford Center for Responsible Quantum Technology, Stanford University)

(Disclosure statement: Michael Timothy Bennett receives funding from the Australian Government. Eliza Perrier receives funding from the Australian Government.)

This article is republished from The Conversation under a Creative Commons license. Read the original article.

(Except for the headline, this story has not been edited by NDTV staff and is published from a syndicated feed.)